The Gold Standard for Responsible AI Governance & Superintelligence Development

AI-IRB AI-SDLC GATES

Codified AI Governance, Ethics, and Risk Management:

Engineered to exceed typical AI governance checklists.

We are the AI-SDLC Institute: a network of AI thought leaders, practitioners, and executives setting the Global Standard for AI governance, risk management, and superintelligence readiness.

In 5 Seconds: These gated checkpoints ensure no AI project proceeds without meeting ethical, regulatory, and risk standards mandated by the AI–IRB.

Just as the increasing power of early PCs was harnessed to usher in vast improvements in human interface design, so too must the power of AI engines be harnessed with governance, mission-critical controls, and checks and balances baked in. Introducing our staged gating model, which counts down from G-12 (project start) to G-0 (full release). Safe. Ignition.

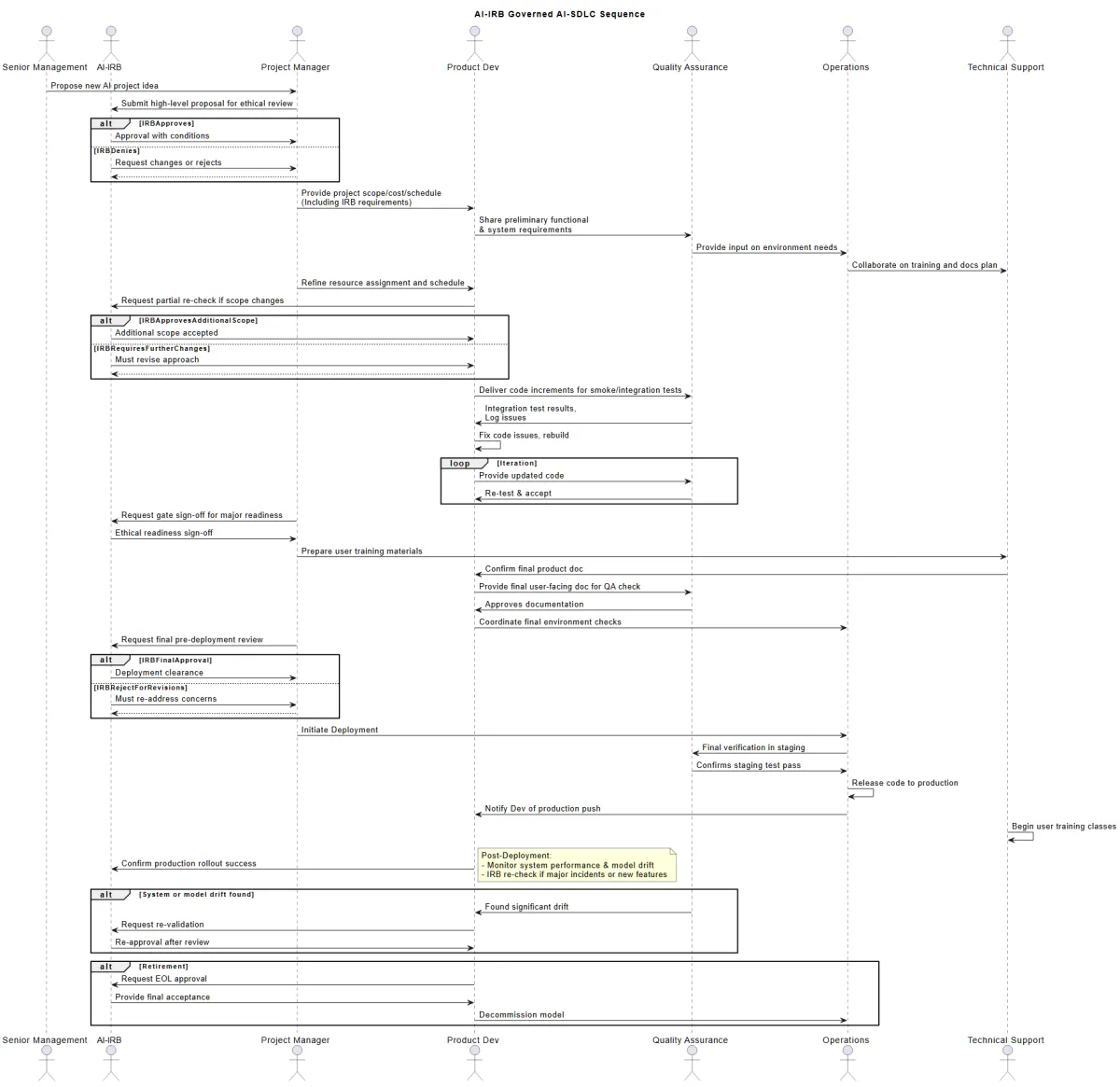

The AI–IRB Governed AI-SDLC Business Gates Blueprint

AI–IRB Governed AI-SDLC Business Gates and SOPs (below)

Governing Superintelligence

1. INTRODUCTION

1.1 Purpose

This document provides a unified description of how the AI–IRB (Artificial Intelligence Institutional Review Board) governs the Business Gates within the AI-SDLC (Artificial Intelligence System Development Life Cycle). It builds upon our prior standard operating procedures (SOPs) while explicitly incorporating ethical, regulatory, and governance considerations for AI projects.

The primary purpose of these business gates is to ensure that at critical points (or “gates”) in a project’s lifecycle, the correct decisions—encompassing cost, scope, schedule, ethical review, and risk management—are made in a transparent, structured, and traceable manner.

1.2 Objectives

Key objectives of these AI-SDLC Business Gates include:

Enhancing Integrity: Improve the integrity of commitments made by ensuring that decisions are thoroughly vetted for ethical, regulatory, and practical concerns.

Minimizing Scope Churn: Once the AI–IRB and project sponsor(s) approve a project scope, further changes should be managed through rigorous Change Control to prevent undue churn.

Common Framework: Establish a consistent method to communicate project status in terms of ethical compliance, business commitments, scheduling, resourcing, and AI-specific constraints.

Minimum Standards: Enforce the minimum criteria needed to pass each gate, including ethical risk assessment, fairness/bias analysis, AI data protections, and documentation readiness.

Measurable Quality: Provide definable milestones to collect metrics (e.g., cycle time, quality, AI model drift) that measure project success and compliance with AI–IRB mandates.

1.3 Definitions

AI–IRB (Artificial Intelligence Institutional Review Board)

A governing body that provides ethical oversight for AI-based projects, ensuring the project meets standards of fairness, transparency, accountability, privacy, and compliance with relevant regulations.

Affordability

The portion of the R&D budget allocated to the “purchase” or development of features, including advanced AI modules.

Anchor Objective

A critical feature or functionality deemed essential to the project's overall success. Anchor objectives are particularly relevant for AI features that drive strategic differentiation.

Baseline

(1) A specification or product deliverable that has undergone formal review and is controlled via change management.

(2) A formally established reference point for subsequent changes in scope, cost, or schedule.

Business Gate

A defined checkpoint in the AI-SDLC where significant business and ethical decisions must be made. Each gate integrates AI–IRB input (where relevant) to validate viability, risk posture, and alignment with AI governance policies.

Lock-Down

A milestone signifying mutual agreement among the Performing Organization(s), Contracting Organization(s), and the AI–IRB to deliver a specific scope within set cost, schedule, and compliance parameters.

Management Phase Review

A formal review—often triggered by gate passage—where the appropriate stakeholders and the AI–IRB can approve or modify the project’s major parameters (scope, cost, schedule, ethical posture).

Performing Organization

The group responsible for completing the project deliverables (e.g., an AI R&D team or a specialized DevOps group). It may include AI specialists, data scientists, QA engineers, or DevOps staff.

Contracting Organization

The group that commissions the work. In many AI contexts, this may be a product or business unit requesting a specialized AI solution or enhancements to an existing platform.

Project

A temporary endeavor with defined objectives, including ethical compliance, culminating in a unique product, service, or AI model.

Service Organization

The group that provides deployment support, including for large-scale AI systems.

Training Organization

The group that provides training for internal and external users on the final AI systems, ensuring transparency and safe usage.

1.4 Abbreviations

AI–IRB: Artificial Intelligence Institutional Review Board

SDLC: System Development Life Cycle

CO: Contracting Organization

PO: Performing Organization

R&D: Research & Development

GA: General Availability

CRO: Controlled Rollout

FOA: First Office Application

PM: Project Manager

QA: Quality Assurance

WBS: Work Breakdown Structure

1.5 References

Internal SOP documents from the AI-SDLC suite (SOP-1000-01-AI and subsequent).

AI–IRB Governance Framework referencing relevant local and international regulations and guidelines (e.g., the EU AI Act, US FDA guidance, or ISO standards for AI-enabled devices).

1.6 Scope

This document and these Business Gates apply to all AI-focused projects (and sub-projects) undertaken within the AI-SDLC environment, governed by the AI–IRB.

2. AI-SDLC BUSINESS GATES OVERVIEW

2.1 Project Lifecycle Phases

The AI-SDLC comprises five broad phases, each culminating in defined gates:

Release Planning Phase

Conduct feasibility studies, define user requirements, and perform fairness/bias analyses.

Produce high-level estimates aligned with the capacity of the performing organization.

Undergo an initial AI–IRB feasibility review to confirm ethical viability.

Definition Phase

Translate user requirements into system requirements with AI-specific elements.

Finalize design constraints around data protection, transparency, and bias prevention.

Engage the AI–IRB for more in-depth ethical risk assessments as needed.

Development Phase

Complete coded implementations of AI models, user interfaces, and data pipelines.

Undertake unit, integration, and early model validation tests with QA.

The AI–IRB is informed if major changes or new ethical concerns arise.

Validation Phase

Validate integrated solutions via system-level tests, stress tests, AI fairness checks, and regulatory compliance.

If an FOA or Beta environment is required, partial deployments can be performed.

The AI–IRB provides final or near-final ethical clearance.

Deployment Phase

Transition the completed AI solution into production, typically with FOA or a controlled rollout.

Post-deployment monitoring addresses model drift, anomalies, or emergent ethical issues.

The project concludes with final sign-off by the AI–IRB if all conditions are satisfied.

2.2 Management Phase Reviews

These reviews center on the AI–IRB’s ethical oversight. Other stakeholders involved include senior management, project sponsors, contracting organizations, and performing organizations.

The key management reviews are:

G-11: Confirms project strategy, anchor objectives, and AI–IRB’s initial ethical sign-off.

G-10: Commits to the definition phase, verifying readiness for deeper risk and data compliance checks.

G-6: The essential Lock-Down, signifying final scope agreement with the AI–IRB's go-ahead.

G-4: Ensures readiness for large-scale validation and compliance.

G-2: Authorizes FOA or Beta deployment, verifying that AI–IRB concerns are resolved.

G-0: Confirms or denies general release, concluding the project for full production usage.

2.3 Standard Gates

Below is the complete list of gates that coordinate standard business and ethical decisions:

G-12: Project Start

G-11: Project Strategy Lock-Down

G-10: Requirements Scope Lock-Down

G-9: Definition Phase Plan Approved

G-8: System Requirements Definition Approved

G-7: Lock-Down Level Estimates Complete

G-6: Project Lock-Down

G-5: Detailed Plans Complete

G-4: Begin Validation

G-3: Begin System Certification

G-2: Begin FOA

G-1: Begin Controlled Rollout

G-0: General Availability
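As an illustration only, the countdown above can be modeled as an ordered enumeration in which a gate may be attempted once every higher-numbered gate has been cleared. This is a minimal Python sketch, not part of the AI-SDLC standard: the names `Gate`, `next_gate`, and `can_attempt` are hypothetical, and treating a formally waived gate (Section 5.4) as "cleared" is an assumption.

```python
from enum import IntEnum
from typing import Optional, Set


class Gate(IntEnum):
    """Standard AI-SDLC business gates; each value is the countdown number (hypothetical model)."""
    PROJECT_START = 12
    PROJECT_STRATEGY_LOCK_DOWN = 11
    REQUIREMENTS_SCOPE_LOCK_DOWN = 10
    DEFINITION_PHASE_PLAN_APPROVED = 9
    SYSTEM_REQUIREMENTS_DEFINITION_APPROVED = 8
    LOCK_DOWN_LEVEL_ESTIMATES_COMPLETE = 7
    PROJECT_LOCK_DOWN = 6
    DETAILED_PLANS_COMPLETE = 5
    BEGIN_VALIDATION = 4
    BEGIN_SYSTEM_CERTIFICATION = 3
    BEGIN_FOA = 2
    BEGIN_CONTROLLED_ROLLOUT = 1
    GENERAL_AVAILABILITY = 0

    @property
    def label(self) -> str:
        return f"G-{self.value}"


def next_gate(current: Gate) -> Optional[Gate]:
    """Return the gate after `current` in the countdown, or None once G-0 is reached."""
    if current is Gate.GENERAL_AVAILABILITY:
        return None
    return Gate(current.value - 1)


def can_attempt(target: Gate, cleared: Set[Gate]) -> bool:
    """True if every earlier (higher-numbered) gate is in `cleared` (passed or formally waived)."""
    return all(gate in cleared for gate in Gate if gate.value > target.value)


print(Gate.PROJECT_LOCK_DOWN.label)                                # G-6
print(next_gate(Gate.PROJECT_LOCK_DOWN).label)                     # G-5
print(can_attempt(Gate.PROJECT_LOCK_DOWN, {Gate.PROJECT_START}))   # False
```

Using an integer-valued enumeration keeps the countdown order and the printed labels in one place, so reports and checks cannot disagree about gate numbering.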

2.4 Project Change Control

Because AI solutions evolve rapidly (model updates, data changes, regulatory shifts), changes must be proactively managed. Each proposed product scope change triggers a formal “Change Request,” assessed by a cross-functional review board, including the AI–IRB. Large changes effectively re-enter the business gates.

Common change types:

AI Model Upgrades: Additional features or major re-training.

Schedule Deviations: Shifts in timeline that can impact data readiness or compliance checks.

Scope Adjustments: Add/remove AI-driven functionalities, possibly due to new ethical constraints or user requests.

2.5 Gate Nomenclature

The labels G-1, G-2, etc. remain the standard designations. Decimal suffixes (such as G-6.1) denote sub-phases or repeated cycles, especially for iterative model retraining or incremental rollouts.
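A minimal sketch of how tooling might parse and order suffixed gate labels. It assumes labels take the form `G-<number>` with an optional `.<suffix>`, and that a sub-phase such as G-6.1 falls after its parent G-6 and before G-5. Both the format and the ordering rule are assumptions for illustration, and the function names are hypothetical.

```python
import re
from typing import Tuple

# Assumed label format: "G-<number>" optionally followed by ".<suffix>", e.g. "G-6.1".
_GATE_LABEL = re.compile(r"G-(\d+)(?:\.(\d+))?")


def parse_gate_label(label: str) -> Tuple[int, int]:
    """Split a label into (gate number, sub-phase suffix); a suffix of 0 means none."""
    match = _GATE_LABEL.fullmatch(label)
    if match is None:
        raise ValueError(f"not a gate label: {label!r}")
    number = int(match.group(1))
    suffix = int(match.group(2)) if match.group(2) is not None else 0
    return number, suffix


def countdown_key(label: str) -> Tuple[int, int]:
    """Sort key: higher gate numbers first (countdown order), sub-phases after their parent."""
    number, suffix = parse_gate_label(label)
    return (-number, suffix)


labels = ["G-5", "G-6.1", "G-12", "G-6", "G-0", "G-6.2"]
print(sorted(labels, key=countdown_key))
# ['G-12', 'G-6', 'G-6.1', 'G-6.2', 'G-5', 'G-0']
```

Comparing suffixes as integers rather than strings keeps a later cycle such as G-6.10 after G-6.9.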

2.6 Structure of This Document

Section 3: Explains gate structure and key components.

Section 4: Details minimum standard requirements for each gate.

Section 5: Discusses real-world tailoring and how the AI–IRB interacts within each gate.

3. GATE STRUCTURE AND COMPONENTS

Each gate includes:

Gate Objective: The business/ethical goal.

Gate Owner: Accountable for ensuring the gate’s criteria are met (often the Project Manager plus AI–IRB for AI-ethics aspects).

Review Board: Approves gate passage; includes the AI–IRB for key checks.

Requirements: Deliverables, such as updated data usage risk reports, code or architecture specifications, or AI–IRB checklists.

Supplier and Receiver: The entity that produces each requirement and the entity that formally accepts it.

Acceptance Criteria: Each requirement’s compliance standards, which can include AI fairness metrics or a data privacy threshold.

If a gate is not met, the gate owner triggers escalation or addresses action items (such as re-analysis, re-estimation, or additional AI–IRB clarifications).
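The components listed above map naturally onto a small data structure. The sketch below is illustrative only: the class and field names are hypothetical, acceptance criteria are modeled as predicates over each deliverable's evidence, and the 0.1 parity-gap threshold in the example is an invented value, not an AI–IRB requirement. A non-empty result corresponds to the action items the gate owner must address or escalate.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Evidence = Dict[str, float]  # hypothetical: metric name -> measured value for one deliverable


@dataclass
class Requirement:
    name: str
    supplier: str                           # entity that produces the deliverable
    receiver: str                           # entity that formally accepts it
    acceptance: Callable[[Evidence], bool]  # acceptance criterion applied to the evidence


@dataclass
class GateDefinition:
    objective: str
    owner: str
    review_board: List[str]
    requirements: List[Requirement] = field(default_factory=list)

    def open_action_items(self, submitted: Dict[str, Evidence]) -> List[str]:
        """Names of requirements that are missing or fail acceptance; empty means the gate can pass."""
        failing = []
        for req in self.requirements:
            evidence = submitted.get(req.name)
            if evidence is None or not req.acceptance(evidence):
                failing.append(req.name)
        return failing


g4 = GateDefinition(
    objective="Begin Validation",
    owner="QA (Performing Org)",
    review_board=["PM", "AI-IRB"],
    requirements=[
        Requirement("fairness report", "QA", "AI-IRB",
                    lambda e: e.get("parity_gap", float("inf")) <= 0.1),
        Requirement("integration test results", "Dev", "QA",
                    lambda e: e.get("failures", 1) == 0),
    ],
)
print(g4.open_action_items({"fairness report": {"parity_gap": 0.05}}))
# ['integration test results']
```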

4. MINIMUM STANDARD REQUIREMENTS

This section defines the baseline requirements for each gate. Projects can add extra gating steps. All references to the AI–IRB indicate where ethical or compliance considerations are integrated.

4.1 Assumptions and Pre-requisites

Project Sponsor Assigned: Before G-12, a sponsor with oversight authority and resources must be in place.

AI–IRB Engagement: The AI–IRB is introduced at the earliest gate (G-12) to confirm the project’s AI readiness and ethical viability.

4.2 Gate: G-12 – Project Start

Objective: Formally kick off the project.

Owner: Project Sponsor

Review Board: N/A (review is often minimal or internal)

Requirements:

Assigned Sponsor

Preliminary high-level concept, noting any potential AI–IRB concerns

Basic documentation of scope/time/cost feasibility

4.3 Gate: G-11 – Project Strategy Lock-Down

Objective: Approve the set of features, anchor objectives, and early ethical constraints.

Owner: Project Manager

Review Board: Sponsor, PM, Contracting Org, AI–IRB

Requirements:

Market Requirements: Must include any AI–IRB concerns.

High-Level Effort Estimate: Quick resource estimate.

Project Strategy: R&D budget, anchor objectives, timeline.

AI–IRB Ethical Scope: Preliminary risk scoping, fairness considerations.

4.4 Gate: G-10 – Requirements Scope Lock-Down

Objective: Formalize the user and system requirements for deeper design.

Owner: Project Manager

Review Board: Project Sponsor, AI–IRB, Program Management, etc.

Requirements:

Initial portfolio scope.

Resource plan (including AI experts, data domain specialists).

Business plan impact, with ethical constraints (privacy approach, data usage).

Gate sign-off.

4.5 Gate: G-9 – Definition Phase Plan Approved

Objective: Sign off on the plan to complete the definition phase.

Owner: Project Manager

Review Board: Sponsor, PM, AI–IRB (if major design or ethics changes arise).

Requirements:

High-level plan with risk approach.

Detailed schedule for definition tasks (model architecture, data readiness).

4.6 Gate: G-8 – System Requirements Definition Approved

Objective: Contracting Org approves the system-level requirements.

Owner: Project Manager

Review Board: PM, Contracting Org, AI–IRB

Requirements:

Final system requirements, including AI model specs, data limits, and compliance constraints.

All requirements traceable to the earlier business requirements.

4.7 Gate: G-7 – Lock-Down Level Estimates Complete

Objective: Completion of estimates for upcoming phases, ensuring readiness for G-6.

Owner: Project Manager

Review Board: PM, AI–IRB (if large scope changes).

Requirements:

Refined resource estimates (data labeling, HPC resources, ops).

Final portfolio scope.

Preliminary WBS.

4.8 Gate: G-6 – Project Lock-Down

Objective: Achieve official commitment among the Contracting and Performing Orgs and the AI–IRB.

Owner: Project Manager

Review Board: Sponsor, AI–IRB, Contracting Org, Service Org.

Requirements:

Project plan with validated scope/cost/schedule.

Updated Deployment Plan referencing AI compliance.

Service Plan for support.

Lock-down baseline, subject to re-check by the AI–IRB.

4.9 Gate: G-5 – Detailed Plans Complete

Objective: The project team finalizes the detailed design/plan.

Owner: Project Manager

Review Board: Sponsor, Dev, AI–IRB if major changes arise.

Requirements:

Detailed WBS for the development phase.

Detailed design specs for any AI modules.

Final acceptance of integration strategy.

4.10 Gate: G-4 – Begin Validation

Objective: Assess readiness for advanced validation (functional tests, stress tests, AI fairness checks).

Owner: QA (Performing Org)

Review Board: PM, AI–IRB

Requirements:

Code complete or near-complete for relevant increments.

Integration test results, readiness to expand testing to full system.

Preliminary product documentation.

QA’s sign-off that the system meets minimum ethical, fairness, and performance constraints.

4.11 Gate: G-3 – Begin System Certification

Objective: Confirm readiness to commence system-level or external certification tests.

Owner: Contracting Org or external test authority

Review Board: PM, AI–IRB, relevant test/cert bodies.

Requirements:

System test results from the previous phase.

Evidence of compliance with AI–IRB’s major conditions.

4.12 Gate: G-2 – Begin FOA

Objective: Authorize initial deployment in a pilot environment or FOA setting.

Owner: Contracting Org

Review Board: Sponsor, AI–IRB, Program Management, etc.

Requirements:

FOA release package tested and validated.

No major (P1/P2) open issues.

FOA site selection.

Risk plan addressing any privacy or fairness concerns in production.

4.13 Gate: G-1 – Begin Controlled Rollout

Objective: For projects that require a staged deployment, this gate ensures readiness to scale up.

Owner: Contracting Org

Review Board: Sponsor, PM, AI–IRB

Requirements:

Verified success in FOA environment.

Additional user training or documentation.

Updated risk or drift monitoring approach, if needed.

4.14 Gate: G-0 – General Availability

Objective: Approve broad release for indefinite production usage.

Owner: Contracting Org

Review Board: Sponsor, AI–IRB, Dev, Service Org

Requirements:

Completed FOA/CRO results with no critical issues.

Final marketing, training, or compliance materials.

Confirmation from AI–IRB that ethical parameters are satisfied.

5. IMPLEMENTATION AND TAILORING

5.1 Gate Objectives

The high-level objectives for each standard gate cannot be modified; this keeps gates consistent and aligned across organizations.

5.2 Additional Gates

Each performing organization may define more gates to reflect unique AI-lifecycle tasks (e.g., advanced fairness testing or secure multi-party computation).

5.3 Iterated Gates

When the project is done in distinct increments (like multiple FOA cycles or iterative model releases), the relevant gates are repeated for each cycle.

5.4 Waiving Gates

Although some gates (like G-1) may be optional, skipping standard gates requires formal sign-off by the project sponsor and AI–IRB, documented in the project plan.

5.5 Specific Requirements

Beyond the mandatory items, each gate can add additional AI or compliance deliverables. For instance, G-8 might require advanced model interpretability artifacts.

5.6 Roles and Responsibilities

Each gate’s roles must be explicitly assigned and documented. AI–IRB membership or delegates typically appear in all major gates from G-12 to G-0.

OWNERS:

CTO / AI–IRB Head: [Name/Contact Info]

Program Management: [Name/Contact Info]

Copyright © 2025 - All rights reserved to AI–IRB Governed AI-SDLC.Institute

AI-SDLC.Institute SOP Library

Comprehensive SOP List: AI-IRB Governed AI-SDLC

SOP-1000-01-AI: AI-Integrated Program/Project Management

Purpose: Clarifies roles and responsibilities for AI-related tasks.

Key Points:

Describes program charter creation, milestone tracking, risk management.

Establishes processes for obtaining AI-IRB approvals at critical points.

SOP-1001-01-AI: Document Governance and AI-IRB Compliance

Purpose: Governs the creation, review, revision, and archiving of documents, ensuring AI-IRB compliance and alignment with regulatory requirements.

Key Points:

Document control procedures (versioning, approval matrix).

AI-IRB mandated sign-offs for changes.

Secure document repository and retention rules.

SOP-1002-01-AI: Capacity Management (AI-Integrated)

Purpose: Outlines methods to forecast, allocate, and manage compute and data capacity for AI solutions, factoring in ML model training and inference workloads.

Key Points:

Resource usage tracking for training/inference.

Monitoring of AI load to ensure system reliability.

Threshold-based triggers for additional resources.
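A minimal sketch of a threshold-based trigger for this SOP. The 80% utilization threshold, the resource pool names, and the `CapacityReading` type are assumptions for illustration; SOP-1002 itself does not specify them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CapacityReading:
    resource: str     # hypothetical pool name, e.g. "training-gpu" or "inference-cpu"
    used: float       # current consumption in the pool's own unit
    allocated: float  # capacity currently provisioned for the pool


def capacity_triggers(readings: List[CapacityReading], threshold: float = 0.8) -> List[str]:
    """Return resources whose utilization (used / allocated) meets or exceeds `threshold`."""
    triggered = []
    for reading in readings:
        if reading.allocated <= 0:
            raise ValueError(f"{reading.resource}: allocated capacity must be positive")
        if reading.used / reading.allocated >= threshold:
            triggered.append(reading.resource)
    return triggered


readings = [
    CapacityReading("training-gpu", used=85, allocated=100),
    CapacityReading("inference-cpu", used=30, allocated=100),
]
print(capacity_triggers(readings))  # ['training-gpu']
```

Training and inference workloads could be given different thresholds by calling the function once per workload type.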

SOP-1003-01-AI: Configuration Management

Purpose: Ensures consistent configuration of AI system components (models, data pipelines, supporting infrastructure).

Key Points:

Baseline tracking of model versions, datasets, dependencies.

Version control guidelines for code, AI model artifacts.

Procedures for controlled changes and rollbacks.
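One way to make a configuration baseline tamper-evident is to fingerprint the model version, dataset identifiers, and pinned dependencies, then diff the dependencies when the fingerprint changes. This is a hypothetical sketch using only the Python standard library; the record layout, the function names, and the example version strings are assumptions, not part of SOP-1003.

```python
import hashlib
import json
from typing import Dict, List, Optional, Tuple


def baseline_fingerprint(model_version: str, dataset_ids: List[str],
                         dependencies: Dict[str, str]) -> str:
    """SHA-256 over a canonical JSON record; independent of list and dict ordering."""
    record = {
        "model_version": model_version,
        "datasets": sorted(dataset_ids),
        "dependencies": dependencies,  # key order is normalized by sort_keys below
    }
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_dependencies(approved: Dict[str, str],
                         current: Dict[str, str]) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Map each dependency whose pinned version differs to (approved, current); None means absent."""
    names = sorted(approved.keys() | current.keys())
    return {n: (approved.get(n), current.get(n))
            for n in names if approved.get(n) != current.get(n)}


approved = {"numpy": "1.26.4", "scikit-learn": "1.4.2"}
current = {"numpy": "2.0.0", "scikit-learn": "1.4.2", "onnx": "1.16.0"}
print(changed_dependencies(approved, current))
# {'numpy': ('1.26.4', '2.0.0'), 'onnx': (None, '1.16.0')}
```

Storing the fingerprint alongside the approved baseline lets a rollback target be verified before it is redeployed.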

SOP-1004-01-AI: Procurement and Purchasing for AI-Enabled Systems

Purpose: Standardizes the acquisition process for AI hardware, software, external datasets, and consulting services.

Key Points:

AI-IRB screening for potential ethical or compliance issues in new tools.

Vendor due diligence for bias and data privacy compliance.

Ensures budget approvals align with project scope.

SOP-1005-01-AI: AI-Integrated Release Planning

Purpose: Integrates AI roadmaps and iteration cycles into the standard SDLC release planning.

Key Points:

AI feature backlog refinement, prioritization, and gating by AI-IRB.

Roadmap alignment with data readiness and capacity constraints.

Triggers for re-validation if new models are introduced.

SOP-1006-01-AI: AI-IRB Engagement and Ethical Review Procedure

Purpose: Defines the process for engaging with the AI-IRB to secure ethical clearances, especially for new or high-impact AI features.

Key Points:

Formal submission for ethical risk reviews.

Communication channels for clarifications and re-approvals.

Records of IRB decisions and any mandated conditions.

SOP-1007-01-AI: AI Asset Management

Purpose: Tracks and manages AI hardware, software licenses, pretrained model assets, and data assets across the organization.

Key Points:

Lifecycle tracking from acquisition to retirement.

Warranty, licensing compliance, and usage monitoring.

Asset modifications or reassignments require version logs.

SOP-1008-01-AI: AI Incident and Escalation Management

Purpose: Details how to handle real-time AI production incidents, anomalies, or emergent model misbehavior, including escalation to AI-IRB if ethics-related.

Key Points:

Tiered incident severity definitions for AI anomalies.

Communication guidelines and immediate fix or rollback procedures.

Post-incident root cause analysis to incorporate lessons learned.

SOP-1009-01-AI: AI Model Drift and Re-Validation Procedure

Purpose: Ensures periodic checks for model drift (performance degradation or domain shifts) and triggers re-validation cycles.

Key Points:

Metrics for drift detection.

Retraining or model retirement guidelines.

AI-IRB involvement if drift implicates fairness or ethics.
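SOP-1009 leaves the drift metrics unspecified. One widely used option is the population stability index (PSI), computed over binned feature or score distributions. The sketch below assumes both distributions are already binned on the same edges and expressed as proportions; the 0.25 re-validation threshold reflects a common rule of thumb, not a value set by this SOP, and the function names are hypothetical.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI = sum((a - e) * ln(a / e)) over bins; inputs are per-bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("both distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0) and division by zero
        psi += (a - e) * math.log(a / e)
    return psi


def needs_revalidation(psi: float, threshold: float = 0.25) -> bool:
    """Assumed rule of thumb: PSI above roughly 0.25 signals a significant shift."""
    return psi > threshold


baseline = [0.25, 0.25, 0.25, 0.25]    # score distribution at validation time
production = [0.70, 0.10, 0.10, 0.10]  # distribution observed in production
psi = population_stability_index(baseline, production)
print(round(psi, 3), needs_revalidation(psi))  # 0.876 True
```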

SOP-1010-01-AI: AI-SDLC Site Monitoring and Incident Management

Purpose: Focuses on 24/7 site monitoring for AI-related production issues, bridging with general operations incident management.

Key Points:

Real-time site monitoring for model performance metrics.

Coordinated escalation if system stability is threatened.

Maintains ongoing compliance with SLA targets.

SOP-1011-01-AI: AI Feature Decommissioning and Model Retirement

Purpose: Lays out a structured approach to retire an AI feature or fully remove a model from production.

Key Points:

Checklist for shutting down active inferences.

Handling dependent functionalities or user workflows.

Archival of associated data and code artifacts.

SOP-1012-01-AI: AI Model Explainability and Interpretability Procedure

Purpose: Establishes mandatory steps to ensure each AI model includes interpretability features and relevant user/engineer documentation.

Key Points:

Setting up model explainers (e.g., SHAP, LIME).

Auditable logs explaining decisions.

IRB checks of the required transparency levels.

SOP-1013-01-AI: AI Model Post-Production Monitoring and Ongoing Validation

Purpose: Mandates continuous monitoring of model KPIs and triggers re-validation if significant changes occur in performance or data distributions.

Key Points:

Metrics and thresholds for performance, bias, or drift.

Automated alerts to responsible teams.

Schedules for periodic health checks.

SOP-1014-01-AI: Regulatory & Ethical AI Compliance Verification

Purpose: Confirms compliance with relevant laws and internal policies for AI solutions (GDPR, CCPA, internal ethics charters, etc.).

Key Points:

Checklists for privacy laws, disclaimers, user consent.

AI-IRB involvement for expansions of scope or new data usage.

Audit trail for all compliance checks.

SOP-1015-01-AI: AI Knowledge Transfer and Handover Procedure

Purpose: Ensures structured knowledge transfer for new AI solutions from the development team to operational owners or support staff.

Key Points:

Documented training sessions, including final readouts.

Code tours, pipeline diagrams, environment replication steps.

Final handover acceptance.

SOP-1020-01-AI: AI Model Lifecycle Management

Purpose: Provides a meta-view of an AI model’s lifespan, from initial concept and prototyping to deployment, maintenance, and eventual retirement.

Key Points:

Stage gates aligned with AI-IRB reviews.

Criteria for scaling up from proof-of-concept to production.

End-of-life triggers and final data disposition.

SOP-1030-01-AI: AI-Ad Hoc Reporting Procedure

Purpose: Governs how internal teams request quick-turnaround or one-time analysis from existing AI models or data sets.

Key Points:

Quick security/privacy checks for new requests.

IRB oversight if the request expands original data usage.

Prompt escalation or revision if scope creeps.

SOP-1040-01-AI: Requirements Definition

Purpose: Identifies how to capture functional and non-functional requirements for AI solutions, including data needs, acceptance criteria, and AI-IRB constraints.

Key Points:

AI-IRB gating for sensitive data usage or high-risk features.

Clear alignment of acceptance test cases.

Cross-functional reviews for risk, compliance, feasibility.

SOP-1050-01-AI: AI Security Administration and Governance

Purpose: Controls security measures around AI systems: data encryption, API access, key management, and vulnerability scanning for AI pipelines.

Key Points:

AI platform security, software supply chain management.

Periodic vulnerability scans on AI code and dependencies.

Zero-trust posture, especially for privileged AI model endpoints.

SOP-1051-01-AI: Security Administration and Oversight

Purpose: Provides an overarching approach to user account management, privileged account controls, and periodic reviews of access logs for the entire environment.

Key Points:

Role-based access control, especially for data scientists with production data.

Security posture reviews by the AI-IRB when emergent risks arise.

Master accounts and restricted user IDs tracked.

SOP-1052-01-AI: AI Model Lifecycle Oversight and Governance

Purpose: Provides AI-IRB oversight ensuring that all major steps in a model’s lifecycle comply with established ethical and regulatory frameworks.

Key Points:

Aligns with SOP-1020 but focuses on IRB gating.

Triage critical or sensitive updates for immediate IRB review.

Mandates final sign-off at each milestone.

SOP-1053-01-AI: Ethical Risk Assessment & Mitigation

Purpose: Mandates regular ethical risk assessment (diversity, bias, societal impact) for AI solutions and prescribes mitigation actions.

Key Points:

Periodic ethical risk reviews.

Mitigation plan sign-off by AI-IRB.

Documentation of residual risk acceptance.

SOP-1054-01-AI: AI-Regulated Project Approvals and Sponsorship

Purpose: Documents how the AI-IRB obtains the necessary cross-functional approvals and sponsorship for regulated AI projects.

Key Points:

Funding and oversight checkpoints.

Sponsor sign-off from Senior Management.

IRB-structured gating across project phases.

SOP-1055-01-AI: Computer System Controls

Purpose: Ensures that all computing environments that host or serve AI models meet uniform control standards (SOX, HIPAA, ISO).

Key Points:

Automated logging, compliance requirements, access restrictions.

Physical and logical security for server rooms / cloud.

Periodic control audits and recertifications.

SOP-1060-01-AI: Service Level Agreement

Purpose: Stipulates minimum performance, availability, and support commitments for AI solutions.

Key Points:

Defines KPIs such as inference latency and uptime.

Penalties or escalation for repeated SLA breaches.

Review schedule for SLA adjustments.
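To make these KPIs concrete, here is a minimal sketch that computes uptime and a nearest-rank 95th-percentile inference latency and compares them with targets. The 99.9% uptime and 200 ms p95 targets are placeholder assumptions, not values from SOP-1060, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def uptime_percent(period_minutes: float, downtime_minutes: float) -> float:
    """Share of the reporting period during which the service was available."""
    return 100.0 * (period_minutes - downtime_minutes) / period_minutes


def p95_latency_ms(samples: Sequence[float]) -> float:
    """95th-percentile latency using the nearest-rank method."""
    if not samples:
        raise ValueError("no latency samples")
    ordered = sorted(samples)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def sla_breaches(uptime: float, p95_ms: float,
                 min_uptime: float = 99.9, max_p95_ms: float = 200.0) -> List[str]:
    """List the KPIs that miss their (assumed) targets."""
    breaches = []
    if uptime < min_uptime:
        breaches.append("uptime")
    if p95_ms > max_p95_ms:
        breaches.append("p95 latency")
    return breaches


latencies = [float(ms) for ms in range(101, 201)]  # 100 samples: 101 ms to 200 ms
p95 = p95_latency_ms(latencies)
print(p95, sla_breaches(uptime_percent(43_200, 60), p95))  # 195.0 ['uptime']
```

Here 43,200 minutes is a 30-day month, so 60 minutes of downtime gives about 99.86% uptime, which misses the assumed 99.9% target.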

SOP-1061-01-AI: Incident Tracking

Purpose: Comprehensive approach to log, categorize, and track incidents involving AI, from minor anomalies to critical outages.

Key Points:

Triage rules for severity.

Root cause analysis must consider model aspects.

Post-mortem that can trigger new IRB reviews if changes are required.

SOP-1100-01-AI: Documentation of Training

Purpose: Records job-related training for staff engaged in AI functions and compliance with policy or regulatory demands (AI-IRB included).

Key Points:

Documents training events and curricula.

Ensures staff have required AI knowledge, including bias/fairness.

Central repository of training logs for audits.

SOP-1101-01-AI: Training and Documentation

Purpose: Creates and maintains user instructions, training materials, and a knowledge base for newly delivered AI solutions.

Key Points:

Trainer selection from Technical Support or SMEs.

Product documentation readiness and acceptance by QA.

Post-training surveys and feedback loops.

SOP-1200-01-AI: Development

Purpose: Outlines coding, integration, and unit test strategies specifically for AI components, referencing data pipelines and ML frameworks.

Key Points:

Emphasizes code reviews, test coverage for ML logic.

Aligns with environment config from SOP-1003.

Tools, branches, merges, and iteration cycles.

SOP-1210-01-AI: Quality Function

Purpose: Details test strategy, integration test plan, QA acceptance for both standard software and AI components (performance, bias, correctness).

Key Points:

System test includes functional, load, and regression tests.

Model performance acceptance criteria.

QA gate sign-off required prior to release.

SOP-1220-01-AI: Deployment

Purpose: Final rollout and push to production for AI solutions. Checks that AI-IRB’s final sign-off is present, and that relevant training is complete.

Key Points:

Transition from QA/staging to production.

Monitoring for immediate post-release anomalies.

Post-deployment review and lessons learned.

SOP-1300-01-AI: AI-IRB Governance & Oversight

Purpose: Defines the role, responsibilities, and authority of the AI-IRB to enforce ethical, regulatory, and operational checks.

Key Points:

Composition, decision-making process, meeting intervals.

Mandated reviews for high-impact or ethically sensitive AI projects.

Documentation of all IRB judgments or waivers.

SOP-1301-01-AI: AI Bias & Fairness Evaluation

Purpose: Mandates methods for detecting and mitigating bias within AI models, ensuring fairness across protected classes.

Key Points:

Tools and metrics for measuring bias.

IRB audits for critical classes (e.g., race, gender).

Remediation steps and retesting.
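SOP-1301 does not name specific bias metrics. As one illustrative choice, the sketch below computes per-group selection rates, the demographic parity difference (highest minus lowest rate), and the ratio of lowest to highest rate, which is often compared against the "four-fifths" (0.8) rule of thumb. The function names and the binary-prediction input format are assumptions.

```python
from collections import defaultdict
from typing import Dict, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Fraction of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must be the same length")
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def parity_difference(rates: Dict[str, float]) -> float:
    """Demographic parity difference: highest minus lowest group selection rate."""
    return max(rates.values()) - min(rates.values())


def selection_rate_ratio(rates: Dict[str, float]) -> float:
    """Lowest over highest selection rate (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


preds = [1, 1, 0, 0, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = selection_rates(preds, group)
print(rates, parity_difference(rates), selection_rate_ratio(rates))
# {'a': 0.5, 'b': 0.25} 0.25 0.5
```

In this toy example the ratio of 0.5 falls well below 0.8, which under the assumed heuristic would flag the model for remediation and retesting.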

SOP-1302-01-AI: AI Explainability & Model Transparency

Purpose: Ensures that each AI model can be explained at an appropriate level to both internal stakeholders and external regulators/users.

Key Points:

Tracking of model interpretability methods.

Documentation or instrumentation for real-time interpretability.

IRB sign-off for permissible black-box levels if truly necessary.

SOP-1303-01-AI: AI Data Protection & Privacy

Purpose: Protects data used in AI systems from unauthorized access, ensuring compliance with relevant privacy laws (GDPR, HIPAA, etc.).

Key Points:

Pseudonymization or anonymization approach.

Secure data pipelines, encryption at rest/in transit.

IRB data usage approvals or rejections.

SOP-1304-01-AI: AI Validation & Monitoring

Purpose: Ongoing process to validate AI models’ correctness, reliability, and compliance after the initial deployment.

Key Points:

Monitoring plan for performance, unexpected behaviors.

Automated triggers for re-validation.

AI-IRB audit logs for significant anomalies.

SOP-1305-01-AI: AI Ethical Risk & Impact Assessment

Purpose: Formal assessment of the broader societal and ethical impacts of an AI initiative, ensuring that all relevant stakeholders and impacted parties are considered.

Key Points:

Comprehensive methodology for risk identification and rating.

Stakeholder consultation (including vulnerable populations).

Documentation of mitigations and sign-off by IRB.

SOP-1306-01-AI: AI End-of-Life & Sunset Process

Purpose: Provides a structured approach for decommissioning AI models that have outlived their useful or safe lifecycle.

Key Points:

AI-IRB verification that all obligations and user impacts are addressed.

Data and model archiving or destruction.

Post-sunset review of lessons learned.

SOP-2002-01: Control of Quality Records

Purpose: Governs management of all quality records and associated evidence for the entire AI-SDLC (including sign-offs, IRB documents, test logs).

Key Points:

Retention schedules, versioning, authorized destruction.

Cross-referencing for audits.

Accessibility and security for critical records.
